I recently came across a cute problem at Public Services and Procurement Canada while working on queue performance issues. Imagine a work environment where servers experience some kind of random interruption that prevents them from continuously working on a task. We’ve all been there—you’re trying to finish important work but you get interrupted by an urgent email or phone call. How can we manage this work environment? What are the possible trade-offs? Should we try to arrange for infrequent but long interruptions or should we aim for frequent but short interruptions?

Let’s rephrase the problem generically in terms of machines. Suppose that a machine processes a job in a random run-time, $T$, with mean $\bar t$ and variance $\sigma_t^2$, but with an otherwise general distribution. The times between machine failures are independent and exponentially distributed, with mean time between failures $\bar f$. When the machine is down from a failure, it takes a random amount of time, $R$, to repair. A failure interrupts the job in progress and, once repaired, the machine continues the incomplete job from the point of the interruption. The repair time distribution has mean $\bar r$ and variance $\sigma_r^2$ but is otherwise general. The question is: what are the mean and variance of the total processing time? The solution is a bit of fun.

The time to complete a job, $\tau$, is the sum of the (random) run-time, $T$, plus the sum of repair times (if any). That is,

$$\tau = T + \sum_{i=1}^{N(T)} R_i,$$

where $N(T)$ is the random number of failures that occur during the run-time and the $R_i$ are independent repair times, each distributed as $R$. First, condition $\tau$ on a run-time $T = t$:

$$\tau \mid (T = t) = t + \sum_{i=1}^{N(t)} R_i.$$

Now, since $N(t)$ is the number of failures by time $t$ and the times between failures are exponentially distributed, $N(t)$ comes from a Poisson counting process, so it is Poisson distributed with mean $t/\bar f$:

$$
\begin{aligned}
\mathbb{E}(\tau \mid t) &= t + \mathbb{E}\left(\sum_{i=1}^{N(t)} R_i\right) \\
&= t + \sum_{k=0}^\infty \mathbb{E}\left[\left.\sum_{i=1}^k R_i \right| N(t) = k\right]\mathbb{P}(N(t) = k) \\
&= t + \sum_{k=1}^\infty (k\bar r) \frac{(t/\bar f)^k}{k!}e^{-t/\bar f} \\
&= t + \bar r \frac{t}{\bar f}e^{-t/\bar f} \sum_{k=1}^\infty \frac{(t/\bar f)^{k-1}}{(k-1)!} \\
&= t \left(\frac{\bar f + \bar r}{\bar f}\right).
\end{aligned}
$$
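As a quick numerical sanity check of the series step above, the sketch below sums the truncated Poisson series $\sum_k k\bar r\,\mathbb{P}(N(t)=k)$ directly and compares $t$ plus that sum with the closed form $t(\bar f + \bar r)/\bar f$. The helper name `expected_repair_total` and the parameter values are illustrative assumptions, not part of the original analysis.

```python
import math


def expected_repair_total(t: float, f_bar: float, r_bar: float, k_max: int = 200) -> float:
    """Truncated sum over k of (k * r_bar) * P(N(t) = k), with N(t) ~ Poisson(t / f_bar)."""
    lam = t / f_bar              # mean number of failures during a run-time t
    p = math.exp(-lam)           # P(N(t) = 0)
    total = 0.0
    for k in range(1, k_max + 1):
        p *= lam / k             # P(N(t) = k) from P(N(t) = k - 1)
        total += k * r_bar * p   # k failures cost k * r_bar of repair time on average
    return total


# Hypothetical example values: run-time t = 10, mean time between failures 5, mean repair 2.
t, f_bar, r_bar = 10.0, 5.0, 2.0
series = t + expected_repair_total(t, f_bar, r_bar)
closed_form = t * (f_bar + r_bar) / f_bar
print(series, closed_form)  # both are 14 up to floating-point rounding
```

The recursion $p_k = p_{k-1}\lambda/k$ avoids computing large factorials directly; terms beyond a few dozen are negligible for moderate $t/\bar f$.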

By the law of iterated expectations, $\mathbb{E}(\tau) = \mathbb{E}[\mathbb{E}(\tau \mid T)]$, and so,

$$\mathbb{E}(\tau) = \bar t\left(\frac{\bar f + \bar r}{\bar f}\right),$$

which gives us the mean time to process a job. Notice that in the limit $\bar f \to \infty$ (a machine that never fails), we recover the expected result that the mean processing time is just $\bar t$.
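The mean can also be checked by brute-force simulation. The sketch below assumes, purely for illustration, a gamma-distributed run-time (shape 4, scale 2.5, so $\bar t = 10$ and $\sigma_t^2 = 25$), gamma-distributed repair times (shape 2, scale 1, so $\bar r = 2$ and $\sigma_r^2 = 2$), and $\bar f = 5$; the helper `simulate_tau` is hypothetical. Failures are generated as exponential gaps measured in run-time, and each one adds a repair before the job resumes where it left off.

```python
import random
import statistics


def simulate_tau(rng: random.Random, f_bar: float, draw_run_time, draw_repair) -> float:
    """Simulate one job's total processing time tau under preemptive-resume failures."""
    t = draw_run_time(rng)                       # run-time T for this job
    total = t
    clock = rng.expovariate(1.0 / f_bar)         # run-time elapsed when the first failure hits
    while clock < t:
        total += draw_repair(rng)                # machine is down for one repair
        clock += rng.expovariate(1.0 / f_bar)    # memoryless gap to the next failure
    return total


# Illustrative (assumed) distributions: T ~ Gamma(4, 2.5), R ~ Gamma(2, 1).
t_bar, f_bar, r_bar = 10.0, 5.0, 2.0
rng = random.Random(2024)
samples = [
    simulate_tau(rng, f_bar,
                 lambda g: g.gammavariate(4.0, 2.5),
                 lambda g: g.gammavariate(2.0, 1.0))
    for _ in range(100_000)
]
mc_mean = statistics.fmean(samples)
predicted_mean = t_bar * (f_bar + r_bar) / f_bar  # 14.0
print(mc_mean, predicted_mean)
```

With 100,000 jobs the simulated mean should land within a few hundredths of the predicted value; only the means of the chosen distributions matter for this check.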

To derive the variance, recall the law of total variance,

$$\text{Var}(\tau) = \text{Var}(\mathbb{E}(\tau \mid T)) + \mathbb{E}[\text{Var}(\tau \mid T)].$$

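Since $\mathbb{E}(\tau \mid T) = T(\bar f + \bar r)/\bar f$ is linear in $T$, the conditional expectation calculation gives

$$\text{Var}(\mathbb{E}(\tau \mid T)) = \left(\frac{\bar f + \bar r}{\bar f}\right)^2 \sigma_t^2.$$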

We still need $\mathbb{E}[\text{Var}(\tau \mid T)]$. For fixed $T = t$, we use the moment-generating function (a Laplace transform evaluated at $-u$) of the sum of the random repair times, $S = \sum_{i=1}^{N(t)} R_i$, that is,

$$
\begin{aligned}
\mathcal{L}(u) = \mathbb{E}[\exp(uS)] &= \mathbb{E}\left[\exp\left(u\sum_{i=1}^{N(t)} R_i\right)\right] \\
&= \sum_{k=0}^\infty \mathbb{E}\left[\left.\exp\left(u\sum_{i=1}^{k} R_i\right) \right| N(t) = k\right] \frac{(t/\bar f)^k}{k!}e^{-t/\bar f} \\
&= \sum_{k=0}^\infty (\mathcal{L}_r(u))^k \frac{(t/\bar f)^k}{k!}e^{-t/\bar f} \\
&= \exp\left(\frac{t}{\bar f}(\mathcal{L}_r(u) - 1)\right),
\end{aligned}
$$

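where $\mathcal{L}_r(u) = \mathbb{E}[\exp(uR)]$ is the moment-generating function of the unspecified repair time distribution, so that $\mathcal{L}_r'(0) = \bar r$ and $\mathcal{L}_r''(0) = \sigma_r^2 + \bar r^2$. Differentiating twice and setting $u = 0$ (where $\mathcal{L}(0) = 1$), the second moment is,

$$\mathbb{E}[S^2] = \mathcal{L}''(0) = \left(\frac{t\bar r}{\bar f}\right)^2 + \frac{t(\sigma_r^2 + \bar r^2)}{\bar f}.$$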
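The second-moment formula is easy to check numerically for a concrete repair distribution. The sketch below assumes gamma-distributed repair times, whose moment-generating function is $(1 - \theta u)^{-k}$ for shape $k$ and scale $\theta$ (valid for $u < 1/\theta$), plugs it into the compound-Poisson expression $\mathcal{L}(u) = \exp\left(\frac{t}{\bar f}(\mathcal{L}_r(u) - 1)\right)$, and compares central finite differences at $u = 0$ with $\mathcal{L}'(0) = t\bar r/\bar f$ and $\mathcal{L}''(0) = (t\bar r/\bar f)^2 + t(\sigma_r^2 + \bar r^2)/\bar f$. The function names `mgf_s` and `mgf` and the parameter values are illustrative.

```python
import math


def mgf_s(u: float, t: float, f_bar: float, shape: float, scale: float) -> float:
    """L(u) = E[exp(u S)] for S = sum of N(t) ~ Poisson(t / f_bar) Gamma(shape, scale) repairs."""
    mgf_r = (1.0 - scale * u) ** (-shape)   # gamma MGF; requires u < 1 / scale
    return math.exp(t / f_bar * (mgf_r - 1.0))


# Illustrative values: t = 10, f_bar = 5, Gamma(2, 1) repairs (r_bar = 2, var_r = 2).
t, f_bar, shape, scale = 10.0, 5.0, 2.0, 1.0
r_bar = shape * scale
var_r = shape * scale ** 2


def mgf(u: float) -> float:
    return mgf_s(u, t, f_bar, shape, scale)


h = 1e-4
first = (mgf(h) - mgf(-h)) / (2.0 * h)                  # central difference for L'(0)
second = (mgf(h) - 2.0 * mgf(0.0) + mgf(-h)) / h ** 2   # central difference for L''(0)

mean_formula = t * r_bar / f_bar                                               # L'(0)
second_formula = (t * r_bar / f_bar) ** 2 + t * (var_r + r_bar ** 2) / f_bar  # L''(0)
print(first, mean_formula)
print(second, second_formula)
```

For these values the formulas give $\mathcal{L}'(0) = 4$ and $\mathcal{L}''(0) = 16 + 12 = 28$, and the finite differences agree to several decimal places.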

We have the moment and variance relationships $\mathcal{L}'(0) = \mathbb{E}[S] = t\bar r/\bar f$ and $\text{Var}(S) = \mathcal{L}''(0) - (\mathcal{L}'(0))^2$, and thus,

$$
\begin{aligned}
\text{Var}(\tau \mid T = t) &= \text{Var}(t + S) = \mathcal{L}''(0) - (\mathcal{L}'(0))^2 \\
&= \left(\frac{t\bar r}{\bar f}\right)^2 + \frac{t(\sigma_r^2 + \bar r^2)}{\bar f} - \left(\frac{t\bar r}{\bar f}\right)^2 = \frac{t(\sigma_r^2 + \bar r^2)}{\bar f},
\end{aligned}
$$

and averaging over the run-time $T$,

$$\mathbb{E}[\text{Var}(\tau \mid T)] = \frac{\bar t(\sigma_r^2 + \bar r^2)}{\bar f}.$$

The law of total variance gives the desired result,

$$
\begin{aligned}
\text{Var}(\tau) &= \text{Var}(\mathbb{E}(\tau \mid T)) + \mathbb{E}[\text{Var}(\tau \mid T)] \\
&= \left(\frac{\bar r + \bar f}{\bar f}\right)^2\sigma_t^2 + \left(\frac{\bar t}{\bar f}\right)\left(\bar r^2 + \sigma_r^2\right).
\end{aligned}
$$
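A minimal sketch that evaluates the closed-form mean and variance and compares them with a Monte Carlo estimate. It assumes, for illustration only, a uniform run-time on $[5, 15]$ (so $\bar t = 10$ and $\sigma_t^2 = 100/12$), exponential repair times with $\bar r = 2$ (so $\sigma_r^2 = 4$), and $\bar f = 5$, for which the formulas give $\mathbb{E}(\tau) = 14$ and $\text{Var}(\tau) = 1.96 \cdot 100/12 + 2 \cdot 8 \approx 32.33$. The function names are hypothetical.

```python
import random
import statistics


def processing_time_moments(t_bar, var_t, f_bar, r_bar, var_r):
    """Closed-form mean and variance of the total processing time tau."""
    factor = (f_bar + r_bar) / f_bar
    mean = t_bar * factor
    var = factor ** 2 * var_t + (t_bar / f_bar) * (r_bar ** 2 + var_r)
    return mean, var


def simulate_tau(rng, f_bar, r_bar, lo, hi):
    """One job with assumed T ~ Uniform(lo, hi) and exponential repairs of mean r_bar."""
    t = rng.uniform(lo, hi)
    total = t
    clock = rng.expovariate(1.0 / f_bar)        # run-time at the first failure
    while clock < t:
        total += rng.expovariate(1.0 / r_bar)   # downtime for this repair
        clock += rng.expovariate(1.0 / f_bar)   # memoryless gap to the next failure
    return total


# Assumed inputs: T ~ Uniform(5, 15) -> t_bar = 10, var_t = 100/12;
# exponential repairs with r_bar = 2 -> var_r = 4; f_bar = 5.
mean, var = processing_time_moments(10.0, 100.0 / 12.0, 5.0, 2.0, 4.0)

rng = random.Random(7)
samples = [simulate_tau(rng, 5.0, 2.0, 5.0, 15.0) for _ in range(200_000)]
mc_mean = statistics.fmean(samples)
mc_var = statistics.variance(samples)
print(f"mean: formula {mean:.3f}, simulation {mc_mean:.3f}")
print(f"var:  formula {var:.3f}, simulation {mc_var:.3f}")

# Sanity check of the f_bar -> infinity limit: Var(tau) -> var_t.
_, var_no_failures = processing_time_moments(10.0, 100.0 / 12.0, 1e12, 2.0, 4.0)
print(var_no_failures)
```

Dropping the $\bar r^2$ term from the formula would predict a variance near 24.3 here, which the simulation would clearly reject.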

Notice that the equation for the total variance makes sense in the $\bar f \to \infty$ limit: the processing time variance becomes the run-time variance, $\sigma_t^2$. The equation also has the expected ingredients, depending on both the run-time and repair time variances. But it also holds a bit of a surprise: it depends on the square of the mean repair time, $\bar r^2$. That dependence leads to an interesting trade-off.

Imagine that we have a setup with fixed $\bar t$, $\sigma_t^2$, and $\sigma_r^2$, and fixed $\mathbb{E}(\tau)$, but we are free to choose $\bar r$ and $\bar f$. That is, for a given mean total processing time, we can choose between a machine that fails frequently with short repair times and a machine that fails infrequently but with long repair times. Which one would we like, and does it even matter, since either choice leads to the same mean total processing time? At fixed $\mathbb{E}(\tau)$ we must have,

![]()

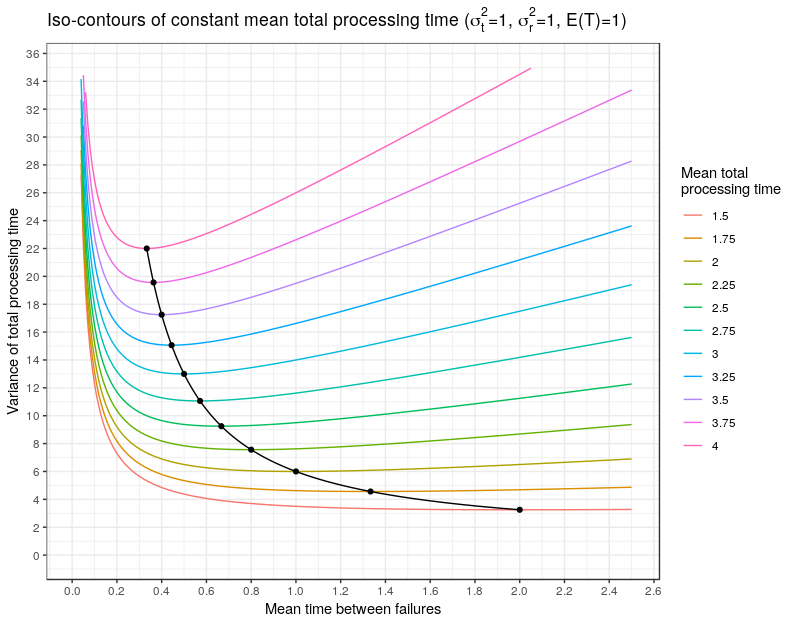

for some constant ![]() . But since the variance of the total processing time depends on

. But since the variance of the total processing time depends on ![]() , different choices of

, different choices of ![]() will lead to different total variance. The graph shows different iso-contours of constant mean total processing time in the total variance/mean time between failure plane. Along the black curve, we see that as the mean total processing time increases, we can minimize the variance by choosing a configuration where the machine fails often but with short repair times.

will lead to different total variance. The graph shows different iso-contours of constant mean total processing time in the total variance/mean time between failure plane. Along the black curve, we see that as the mean total processing time increases, we can minimize the variance by choosing a configuration where the machine fails often but with short repair times.
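To see the trade-off numerically, the sketch below fixes the ratio $c = \bar r/\bar f$ (and hence $\mathbb{E}(\tau) = \bar t(1 + c)$) and evaluates the total variance for several choices of $\bar f$. Along such a contour the first term, $(1+c)^2\sigma_t^2$, is constant, so the variance changes only through $\bar t(c^2\bar f + \sigma_r^2/\bar f)$. The parameter values are illustrative assumptions. One caveat follows from the same formula: with $\sigma_r^2$ held fixed, the term $\bar t\sigma_r^2/\bar f$ grows as failures become very frequent, so the variance is smallest at $\bar f = \sigma_r/c$ rather than as $\bar f \to 0$; the values below stay above that point.

```python
import math


def total_variance(t_bar, var_t, f_bar, r_bar, var_r):
    """Var(tau) = ((r_bar + f_bar) / f_bar)^2 var_t + (t_bar / f_bar)(r_bar^2 + var_r)."""
    return ((r_bar + f_bar) / f_bar) ** 2 * var_t + (t_bar / f_bar) * (r_bar ** 2 + var_r)


# Illustrative values: every configuration below has E(tau) = t_bar * (1 + c) = 14.
t_bar, var_t, var_r = 10.0, 25.0, 0.25
c = 0.4                                   # fixed ratio r_bar / f_bar

for f_bar in (2.0, 5.0, 10.0, 20.0):      # from frequent/short to rare/long failures
    r_bar = c * f_bar                     # repair time scales with the failure spacing
    v = total_variance(t_bar, var_t, f_bar, r_bar, var_r)
    print(f"f_bar={f_bar:5.1f}  r_bar={r_bar:4.1f}  Var(tau)={v:.3f}")

f_star = math.sqrt(var_r) / c             # minimizer of c^2 * f_bar + var_r / f_bar
print(f"variance is minimized at f_bar = {f_star:.3f}")
```

With these numbers the variance climbs from 53.45 at $\bar f = 2$ to 81.125 at $\bar f = 20$, even though the mean is 14 throughout.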

Why is this important? Well, in a queue, all else remaining equal, increasing the variance of the service time increases the expected queue length. So, in a workflow, if we have to live with interruptions, for the same mean processing time, it’s better to live with frequent short interruptions rather than infrequent long interruptions. The exact trade-offs depend on the details of the problem, but this observation is something that all government data scientists who are focused on improving operations should keep in the back of their mind.
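To make the queueing claim concrete, here is a sketch that assumes the simplest setting where it holds exactly: an M/G/1 queue (Poisson arrivals, one server, independent and identically distributed service times), for which the Pollaczek-Khinchine formula gives the mean number of jobs waiting as $L_q = \lambda^2\,\mathbb{E}[\tau^2] / (2(1-\rho))$ with $\rho = \lambda\,\mathbb{E}(\tau)$. Each job's total processing time $\tau$ is treated as its service time; the arrival rate and the two service-time variances (both with mean 14) are illustrative assumptions, and `mg1_mean_queue_length` is a hypothetical helper.

```python
def mg1_mean_queue_length(arrival_rate: float, service_mean: float, service_var: float) -> float:
    """Pollaczek-Khinchine mean number waiting in an M/G/1 queue."""
    rho = arrival_rate * service_mean                 # server utilization
    if not 0.0 <= rho < 1.0:
        raise ValueError("M/G/1 queue is unstable unless arrival_rate * service_mean < 1")
    second_moment = service_var + service_mean ** 2   # E[tau^2]
    return arrival_rate ** 2 * second_moment / (2.0 * (1.0 - rho))


# Illustrative assumption: one job arrives every 20 time units on average (rho = 0.7).
arrival_rate = 0.05
for service_var in (53.45, 81.125):                   # same mean, different variance
    print(service_var, mg1_mean_queue_length(arrival_rate, 14.0, service_var))
```

For the same mean service time, the configuration with the larger variance yields the longer expected queue (about 1.04 versus 1.15 jobs waiting).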